Add ORT worker proxy to prevent main thread locking, and service worker to cross-origin isolate which allows wasm threads #9
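
The cross-origin-isolation half of this change relies on the fact that a service worker can rewrite the headers of every response it serves. Below is a minimal sketch of that idea, not the code in this PR. It re-serves each response with `Cross-Origin-Opener-Policy: same-origin` and `Cross-Origin-Embedder-Policy: require-corp`, which browsers require before they expose `SharedArrayBuffer` (and therefore wasm threads). The helper name `withIsolationHeaders` is hypothetical, and the install/activate handling is an assumption modeled on commonly used "COI service worker" scripts.

```typescript
// Sketch only: the headers a page needs to become cross-origin isolated.
const ISOLATION_HEADERS: Record<string, string> = {
  "Cross-Origin-Opener-Policy": "same-origin",
  "Cross-Origin-Embedder-Policy": "require-corp",
};

// Hypothetical helper: copy a response and add the isolation headers.
function withIsolationHeaders(response: Response): Response {
  // Opaque and error responses report status 0 and cannot be rebuilt.
  if (response.status === 0) return response;
  const headers = new Headers(response.headers);
  for (const [name, value] of Object.entries(ISOLATION_HEADERS)) {
    headers.set(name, value);
  }
  return new Response(response.body, {
    status: response.status,
    statusText: response.statusText,
    headers,
  });
}

// Register the handlers only when this file runs as a service worker.
const sw = globalThis as any;
if (typeof sw.ServiceWorkerGlobalScope === "function" && sw instanceof sw.ServiceWorkerGlobalScope) {
  // Take control of already-open pages as soon as possible.
  sw.addEventListener("install", () => sw.skipWaiting());
  sw.addEventListener("activate", (event: any) => event.waitUntil(sw.clients.claim()));
  sw.addEventListener("fetch", (event: any) => {
    const request: Request = event.request;
    // Leave these requests alone; re-fetching them throws in some browsers.
    if (request.cache === "only-if-cached" && request.mode !== "same-origin") return;
    event.respondWith(fetch(request).then(withIsolationHeaders));
  });
}
```

The page registers the worker with `navigator.serviceWorker.register(...)` and has to reload once so that the worker controls it; after that, `self.crossOriginIsolated` should be `true`. Note that `require-corp` also means cross-origin subresources, such as model files on a CDN, must be served with CORS or `Cross-Origin-Resource-Policy` headers.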

Thanks so much! I'll try this out soon.

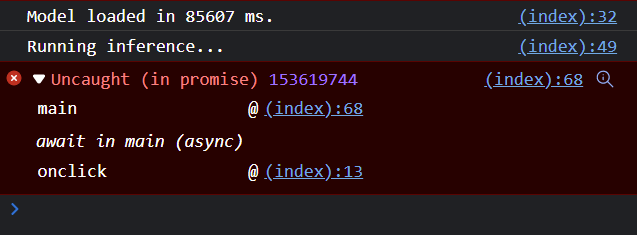

I tried to run it locally and got this error:

Hmm, this could be related to your custom build of ORT Web using different Emscripten parameters (like […]). Another guess is that your machine has a larger-than-normal number of threads, and the normal build isn't "prepared" for that number. In that case, I think it's probably a bug in ORT Web. Can you try putting the following line after the […]? I think the default value is […]. Also, try this demo to see if it works on your machine (it takes a minute or so to initialize due to the huge number of ops):
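
To test the thread-count theory without depending on the machine's core count, the number of threads can be pinned explicitly before any session is created. This is a minimal sketch, assuming `ort` is the onnxruntime-web module and that the build in use honors `ort.env.wasm.numThreads` (a custom build may not). `pickNumThreads`, `configureThreads` and the default cap of 4 are hypothetical choices for illustration.

```typescript
// Hypothetical stand-in for the relevant part of `ort.env.wasm`.
interface WasmEnvLike {
  numThreads?: number;
}

// Threads need SharedArrayBuffer, i.e. a cross-origin isolated page;
// otherwise fall back to a single thread. `cap` is an illustrative limit.
function pickNumThreads(hardwareConcurrency: number | undefined, isolated: boolean, cap = 4): number {
  if (!isolated) return 1;
  const cores = hardwareConcurrency !== undefined && hardwareConcurrency > 0 ? hardwareConcurrency : 1;
  return Math.max(1, Math.min(cap, cores));
}

// Call this before the first InferenceSession is created (assumption:
// ORT Web reads these settings when its wasm backend initializes).
function configureThreads(env: WasmEnvLike, hardwareConcurrency: number | undefined, isolated: boolean, cap = 4): void {
  env.numThreads = pickNumThreads(hardwareConcurrency, isolated, cap);
}
```

In the browser this would be called as `configureThreads(ort.env.wasm, navigator.hardwareConcurrency, self.crossOriginIsolated)`. Comparing runs with the cap set to 1, 2 and 8 would show whether the error depends on the thread count.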

Maybe try commenting out the […]. The error you're getting for the super-resolution demo looks like it could be an out-of-memory error that's unrelated to what we're trying to debug here. Are you trying with Chrome? If so, that's really strange, since it works for me, and as long as your system has more than 1.5GB of RAM (the limit it looks like you're hitting), which I assume it does, I don't see why it would give you that error.

I'm using Edge, so yeah, basically Chrome. I have 32GB of RAM. With […], I do get this output with 8 threads, and I got some of it, though fewer times, with 2 threads:

I think it's no longer using SIMD, because IIUC it should be loading […]. The super-resolution demo that I linked earlier loads […]:

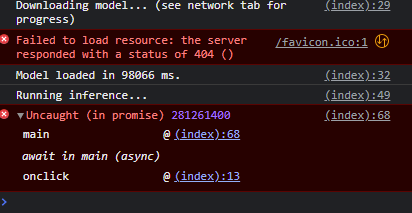
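
One way to confirm which wasm features a browser actually offers (and therefore which ORT Web binary it can load) is to probe them directly. This sketch assumes the byte-level probe approach used by the `wasm-feature-detect` package: `WebAssembly.validate` is asked whether a tiny module containing SIMD instructions is valid, and threads are treated as available only when `SharedArrayBuffer` exists on a cross-origin isolated page. `detectWasmFeatures` is a hypothetical name, and the mapping from these flags to specific `.wasm` file names varies between ORT Web versions.

```typescript
// Tiny module `(func (result v128) i32.const 0 i8x16.splat i8x16.popcnt)`;
// it only validates if the engine supports wasm SIMD.
const SIMD_PROBE = new Uint8Array([
  0x00, 0x61, 0x73, 0x6d, 0x01, 0x00, 0x00, 0x00, // magic + version
  0x01, 0x05, 0x01, 0x60, 0x00, 0x01, 0x7b, // type section: () -> v128
  0x03, 0x02, 0x01, 0x00, // function section: one function of type 0
  0x0a, 0x0a, 0x01, 0x08, 0x00, // code section: one 8-byte body, no locals
  0x41, 0x00, 0xfd, 0x0f, 0xfd, 0x62, 0x0b, // i32.const 0; i8x16.splat; i8x16.popcnt; end
]);

interface WasmFeatures {
  simd: boolean;
  threads: boolean;
}

function detectWasmFeatures(): WasmFeatures {
  const simd = typeof WebAssembly === "object" && WebAssembly.validate(SIMD_PROBE);
  // Wasm threads need SharedArrayBuffer, which browsers only expose to
  // cross-origin isolated pages.
  const isolated = (globalThis as any).crossOriginIsolated === true;
  const threads = typeof SharedArrayBuffer === "function" && isolated;
  return { simd, threads };
}
```

Logging `detectWasmFeatures()` next to the network tab would show whether the browser reports SIMD support while a non-SIMD binary is still being fetched.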

Ideally you'd be able to reproduce these bugs/errors without your custom build; I think that would get the ORT Web maintainers' attention more readily. Swapping out the custom build for a normal build, and your current model (with training ops) for one that works without training ops, would tell you whether it's training-ops-related.

Also, I realized that this error is probably not OOM-related, or at least not in the same way as that other issue I linked, since the number would indicate ~150MB rather than 1.5GB. So if you could test it on Chrome, that would be interesting, because it might be an Edge-related bug. I've tested on Edge (Linux) and it works fine for me.
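
The ~150MB vs 1.5GB distinction is easy to get wrong by a factor of ten when reading raw numbers out of an error message. A small back-of-envelope sketch, assuming the reported figure is either a byte count or a count of WebAssembly memory pages (each page is 64 KiB); the helper names are hypothetical.

```typescript
// WebAssembly linear memory is sized in 64 KiB pages.
const WASM_PAGE_BYTES = 64 * 1024;

// 32-bit wasm can address at most 65536 pages, i.e. 4 GiB.
const WASM32_MAX_PAGES = 65536;

// Hypothetical helpers for sanity-checking sizes quoted in an error.
function pagesToBytes(pages: number): number {
  return pages * WASM_PAGE_BYTES;
}

function formatMiB(bytes: number): string {
  return `${(bytes / (1024 * 1024)).toFixed(1)} MiB`;
}
```

For example, `formatMiB(pagesToBytes(2400))` gives `"150.0 MiB"`, while 1.5 GiB corresponds to 24576 pages.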

BTW, I tried https://josephrocca.github.io/super-resolution-js/ in Google Chrome and got a similar error to the one I got in Edge:

Please feel free to close this if you'd rather make the changes in a different way and just use this as a reference. I figured it'd be easier to open a pull request than to explain in #8.
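
The worker-proxy half of the change keeps ORT's heavy calls off the main thread by running them in a Web Worker and exchanging messages. Below is a minimal sketch of the main-thread side of such a proxy, not this PR's actual code: every call is tagged with an id, posted to the worker, and settled when a reply with the same id comes back. `WorkerRpcClient`, `PortLike` and the message shapes are hypothetical. Recent ORT Web versions also document a built-in `ort.env.wasm.proxy` flag for a similar purpose, which is worth checking against the version in use.

```typescript
// Anything with postMessage works: a Worker, a MessagePort, or a test double.
interface PortLike {
  postMessage(message: unknown): void;
}

interface RpcRequest {
  id: number;
  method: string;
  args: unknown[];
}

interface RpcReply {
  id: number;
  result?: unknown;
  error?: string;
}

class WorkerRpcClient {
  private readonly port: PortLike;
  private nextId = 0;
  private readonly pending = new Map<number, { resolve: (value: unknown) => void; reject: (reason: Error) => void }>();

  constructor(port: PortLike) {
    this.port = port;
  }

  // Post a request to the worker; the promise settles when its reply arrives.
  call(method: string, ...args: unknown[]): Promise<unknown> {
    const id = this.nextId++;
    return new Promise((resolve, reject) => {
      this.pending.set(id, { resolve, reject });
      const request: RpcRequest = { id, method, args };
      this.port.postMessage(request);
    });
  }

  // Route every message from the worker here, e.g. from worker.onmessage.
  handleReply(reply: RpcReply): void {
    const entry = this.pending.get(reply.id);
    if (entry === undefined) return; // unknown or already-settled id
    this.pending.delete(reply.id);
    if (reply.error !== undefined) {
      entry.reject(new Error(reply.error));
    } else {
      entry.resolve(reply.result);
    }
  }

  get pendingCount(): number {
    return this.pending.size;
  }
}
```

On the main thread this would be wired up as `worker.onmessage = (e) => client.handleReply(e.data)`, while the worker creates the `InferenceSession`, runs it, and posts back `{ id, result }` or `{ id, error }`. Large tensor buffers can be passed in `postMessage`'s transfer list to avoid copying them between threads.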